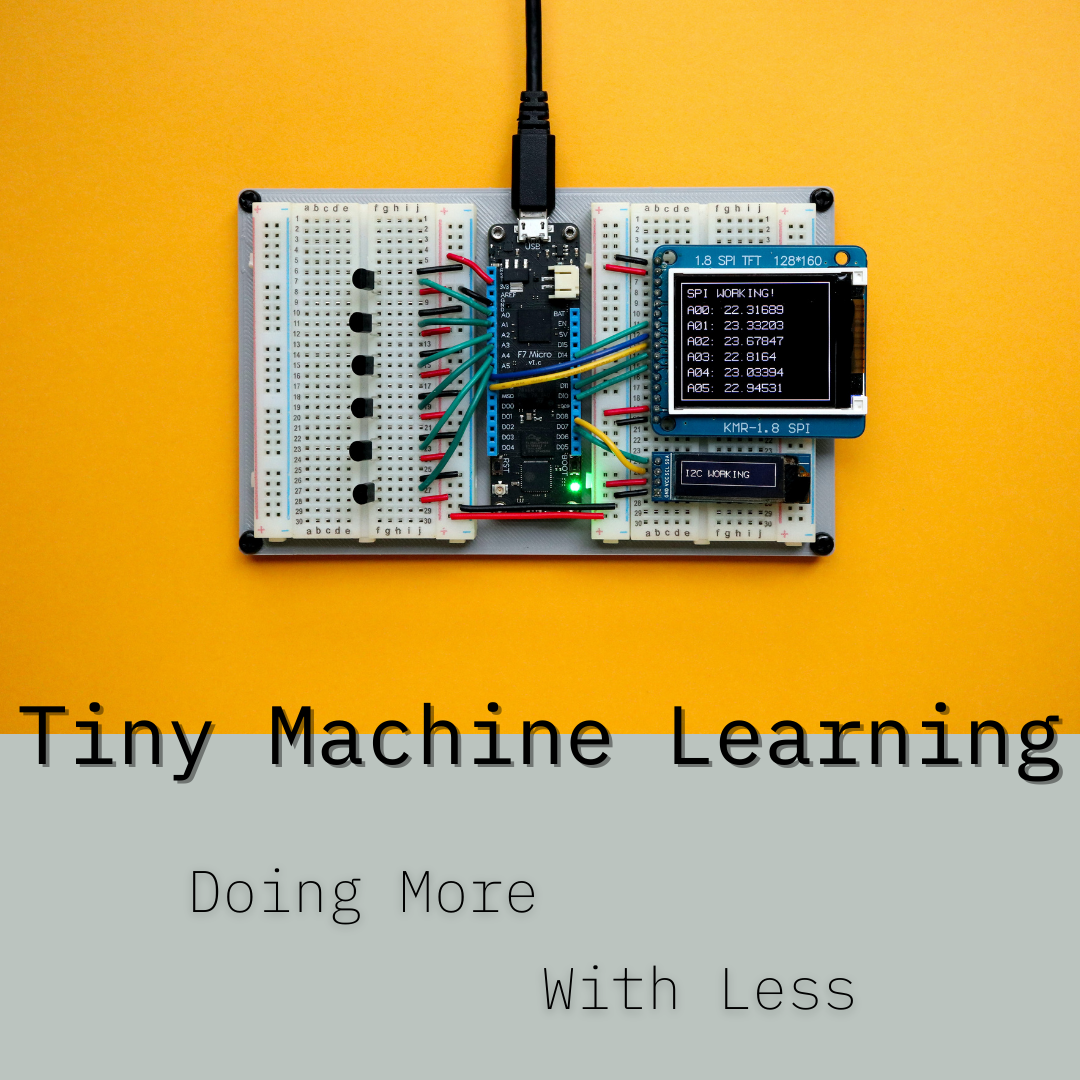

Tiny Machine Learning

The future is now, and it looks like this: Tiny Machine Learning, also known as TinyML, is a fast-growing field of machine learning that enables faster data analysis where bandwidth, energy usage, or time are of particular importance. TinyML involves algorithms that take up as little space as possible so they can run on low-powered hardware or resource-constrained systems.

Machine Learning (“ML”), as Emily Shaw of ATI explains in our previous blog post “AI is Everywhere,” is “the idea that a machine can act more efficiently when forming its algorithms based on the information it is consistently receiving rather than being built with a set of pre-programmed expectations.” Tiny Machine Learning strives to solve two common problems of ML: cost and power efficiency. It does so by making data analytics possible on low-powered hardware with limited processing power and small memory, aided by software built explicitly for small-footprint inference. Inference is the process of running a trained ML model in production to make predictions on new data.

Doing More With Less

To understand why we need to do more with less, we have to look at the embedded-system level, where the resource-constrained systems mentioned above operate.

Let’s talk about IoT (Internet of Things) devices. IoT devices connect to the internet, often through a larger internet-connected device. They collect data and send it back to a centralized server where machine learning takes place. Examples include sensors of all types, wearable activity trackers, microphones, and RFID transmitters. The embedded systems in such devices are tiny, which makes TinyML crucial if we want these devices to be smart at the embedded level.

TinyML technology, which has gained significant momentum over the last couple of years and is projected to reach more than $70 billion in economic value in the next five years, is driving rapid growth in ML implementation. By bypassing the resource constraints that previously restricted where machine learning could be deployed, TinyML allows machine learning to do more.

“ML carried out solely in the cloud can be expensive in terms of device battery power, network bandwidth, and time to transmit data to the data center,” explains Steve Roddy, vice president of product marketing for Arm’s Machine Learning Group. “All of these costs can limit how widespread the adoption of ML becomes. TinyML enables ML to be done globally, as the ML inference can be carried out on the device where the data is generated.”

What The Future Holds

As early as 2022, we will see TinyML being utilized for home appliances, cars, industrial equipment such as remote wind turbines, agricultural machinery, healthcare, conservation, and military devices, among other things.

TinyML is already helping to protect elephants in India, and many other countries are exploring how the technology could help save their wildlife. Researchers from the Polytechnic University of Catalonia have found a way to use TinyML to reduce fatal collisions with elephants on the Siliguri-Jalpaiguri railway line. TinyML is also helping to protect whales in the waters around Vancouver and Seattle, where they often end up in busy shipping lanes. Sensors and embedded machine learning perform continuous real-time monitoring to warn ships of nearby whales.

For many, TinyML is also about giving power back to small companies and individual developers. “Instead of the big guys owning the whole thing, the guy who runs the farm, or the guy who runs the supermarket, or the guy who runs the factory, they do it locally,” said Evgeni Gusev, chairman of the board of the TinyML Foundation and senior director of engineering at Qualcomm. “Anyone with no background in data science, special math training, or special programming can develop their stuff.”

TinyML has come a long way and shows no signs of slowing down. Its beneficial impact on resource-constrained systems is already remarkable and will continue to grow. With its low cost, low latency, low power consumption, and minimal connectivity requirements, TinyML offers solutions to many real-world problems.

For more information about TinyML, I’ve provided several links of interest.
